The Databricks Lakehouse Platform

This post summarizes Matei Zaharia’s CIDR 2022 keynote.

The talk itself is broken into 4 parts:

Lakehouse systems: what are they and why now?

Building lakehouse systems

Challenges in cloud platforms

Ongoing projects

We’ll be focusing on the first two.

Lakehouse systems: what are they and why now?

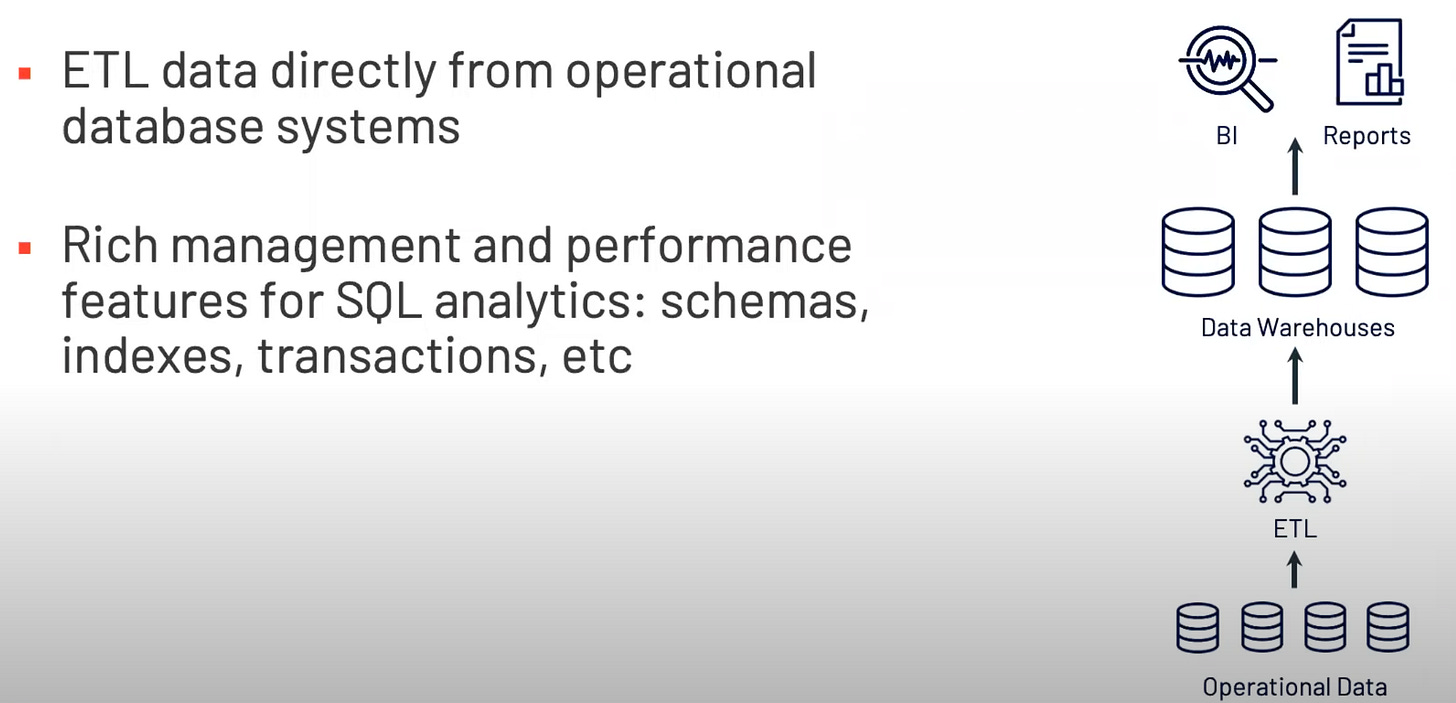

In this part, Matei led us through the evolution of analytical data systems, which started from the concept of data warehouses in the 1980s.

However, several challenges emerged as data analytics became more widely adopted. Specifically, Matei mentioned the following problems for data warehouses:

Could not support rapidly growing unstructured and semi-structured data: time series, logs, images, documents, etc.

High cost to store large datasets.

No support for data science & ML.

Data lakes emerged in response to these challenges.

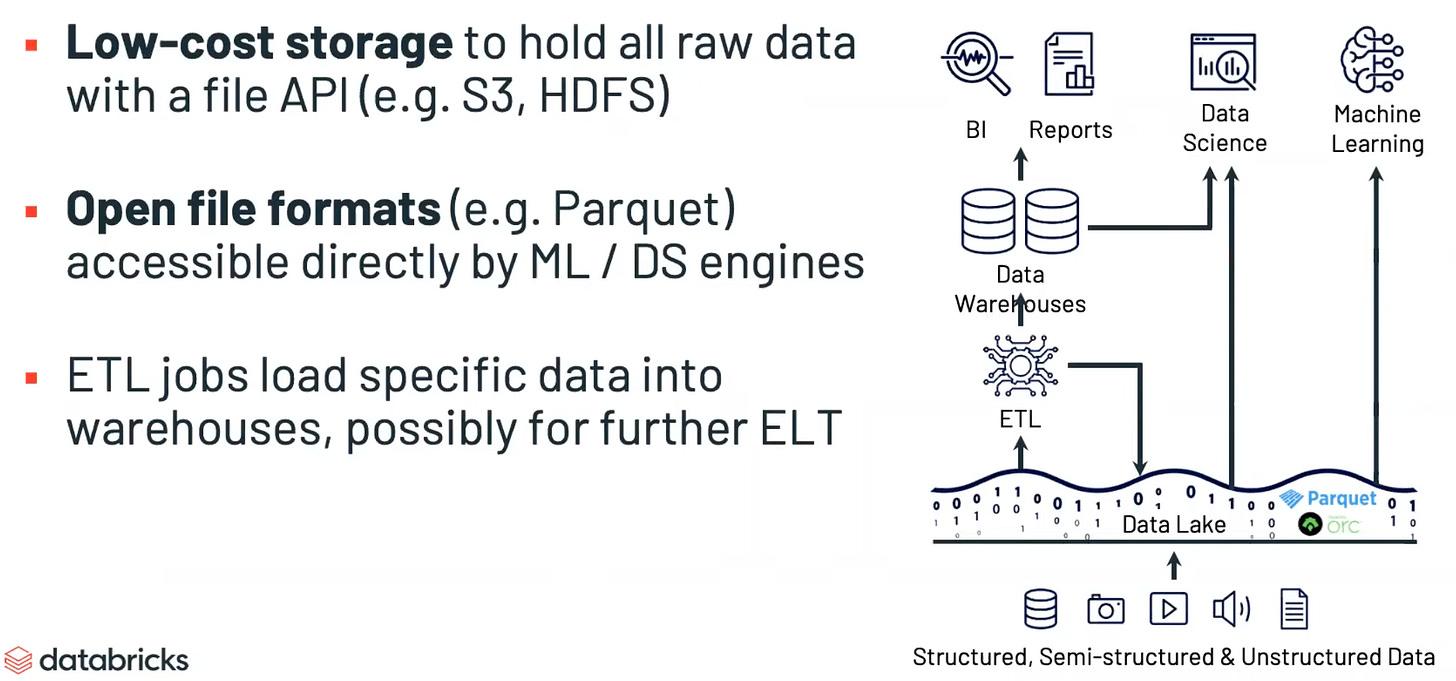

The basic idea of data lakes, as Matei pointed out, is:

“instead of trying to move things into a structured schema for historical analysis from the beginning, we’re instead just going to have a low-cost storage system that can hold raw data in whatever format it arrives.”

The data in these systems is usually stored and accessed via a file-like API such as S3 or HDFS. This also provides flexibility in terms of the data storage format. Consequently, another important aspect of data lakes is that “they are often based on open file formats such as Apache Parquet and these are accessible directly to a wide range of machine learning and data science and common tools”.

On the other hand, a data lake by itself isn’t an “amazingly useful data management system in that it doesn’t have a lot of functionalities” such as transactions or fine-grained security controls. Therefore, in practice, people often have to run ETL pipelines that load a subset of the data into a data warehouse for traditional BI and analytics use cases.

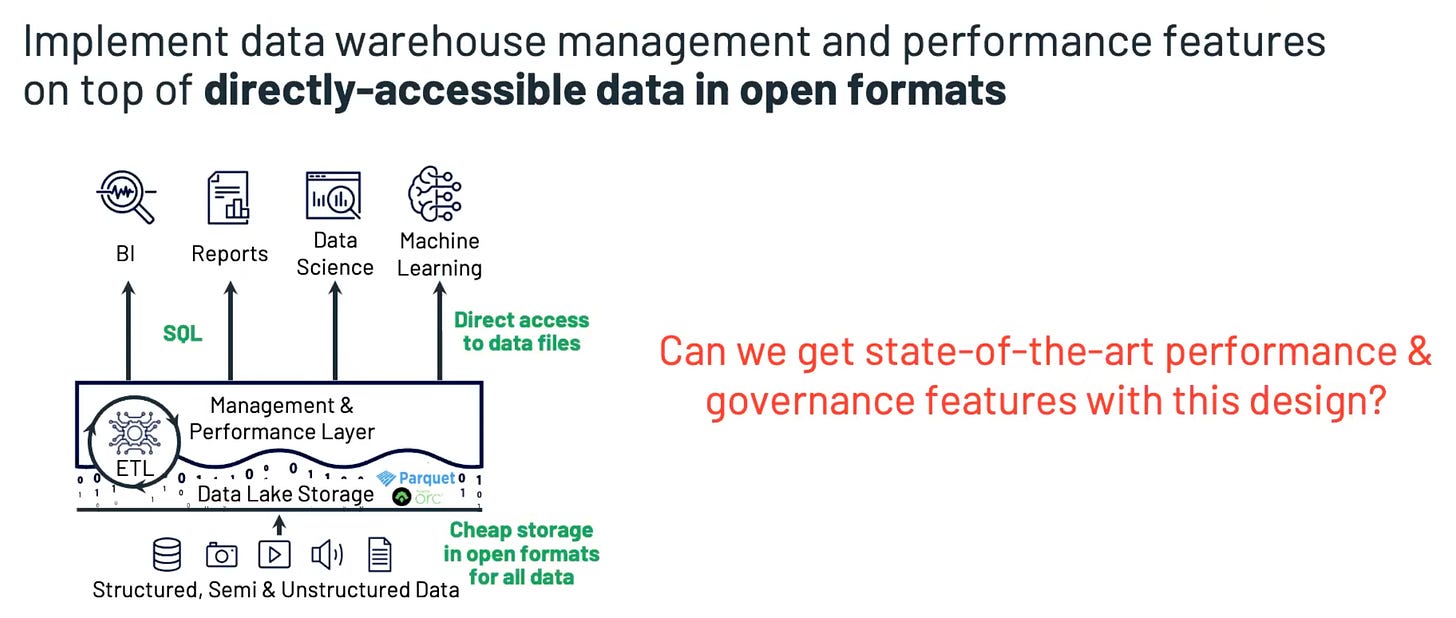

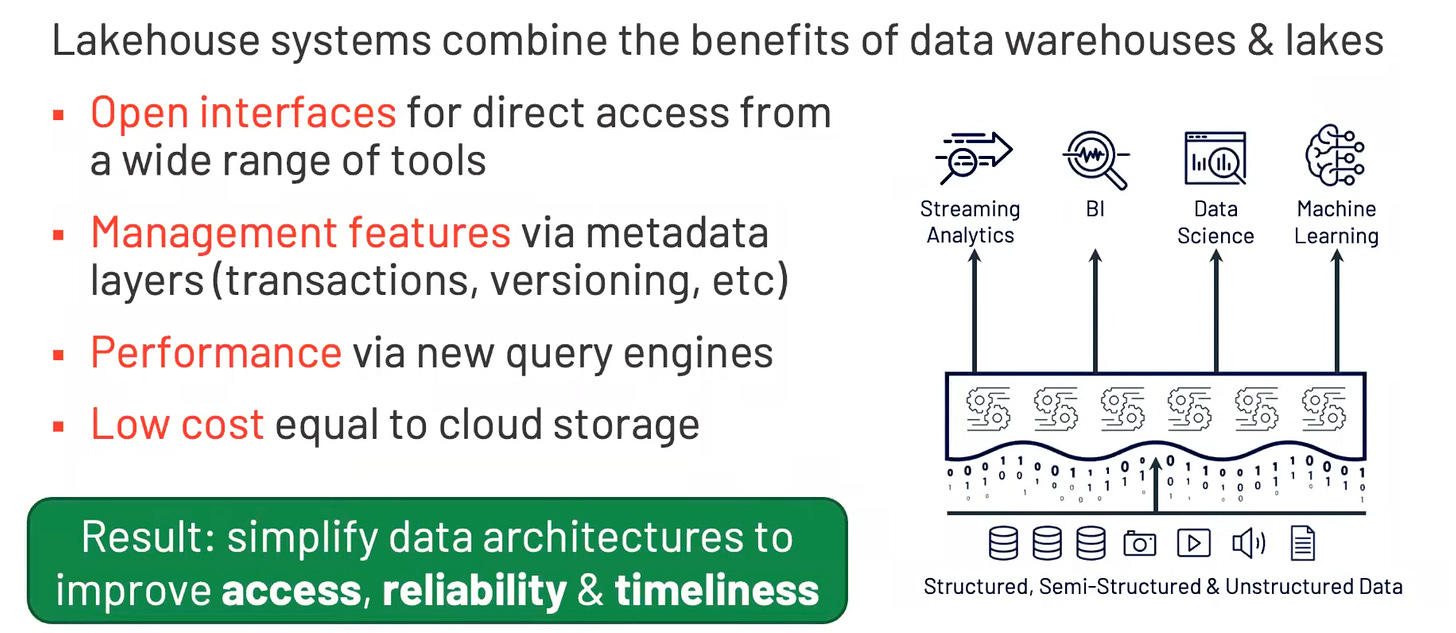

Obviously, having to insert an additional layer of ETL isn’t ideal. So Databricks came up with Delta Lake, their lakehouse system that “implements data warehouse management and performance features on top of directly-accessible data in open formats”.

With that, let’s move on to how Databricks implements Delta Lake at a high level.

Building lakehouse systems

The key technologies enabling lakehouse systems are:

Metadata layers on data lakes: add transactions, versioning & more

Lakehouse engine designs: performant SQL on data lake storage

Declarative I/O interfaces for data science & ML

Metadata layers on data lakes

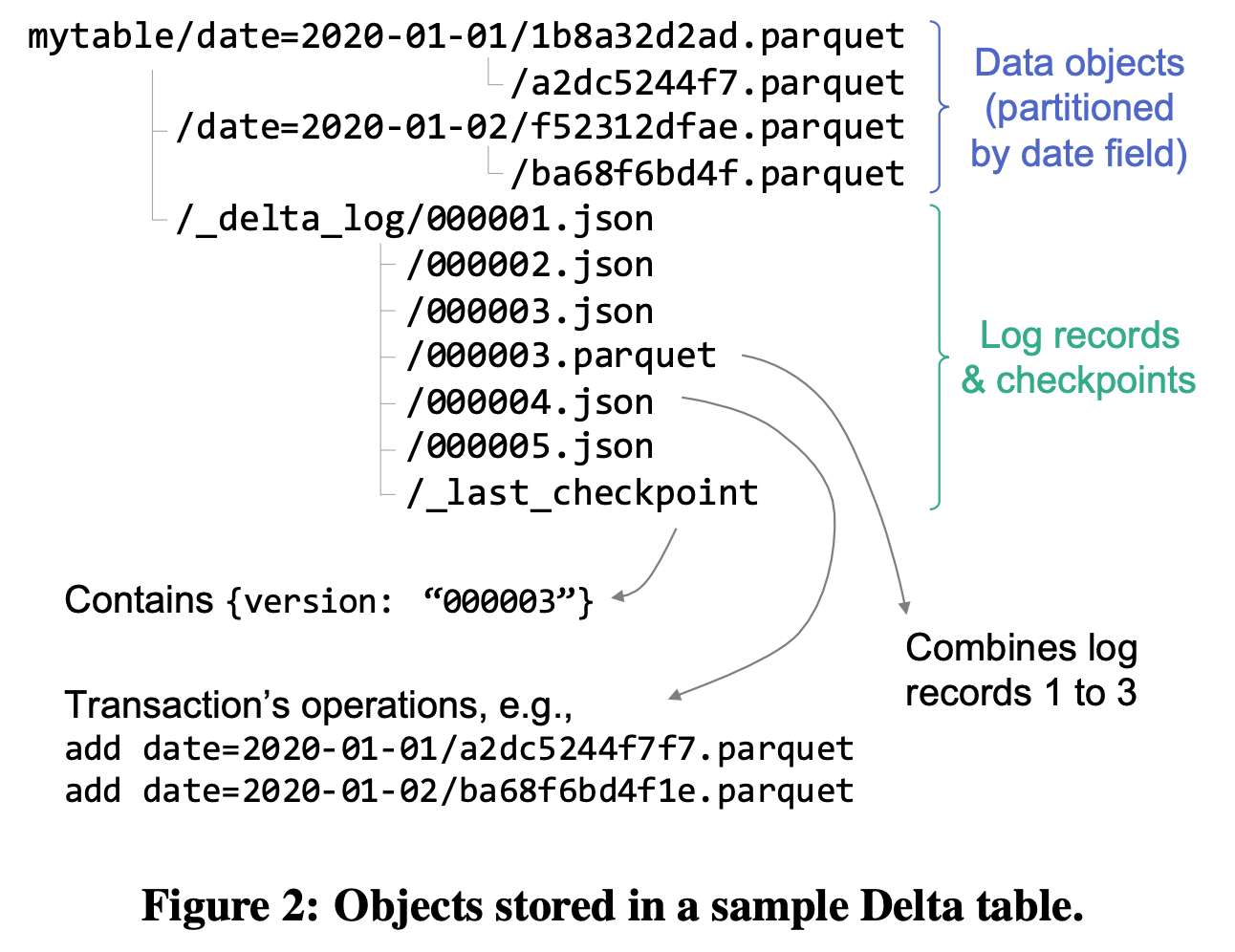

The main idea is to store the metadata that tracks which files belong to each table version in the data lake’s underlying storage (e.g. S3 or HDFS) itself. The paper “Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores”, which we’ll dive into in the next post, describes the protocol in great detail. Below is a figure from the paper that describes an example Delta table layout.

This design makes several additional management features possible, such as time travel to old table versions, zero-copy CLONE by forking the log, and streaming I/O. We won’t go into these here, but interested readers can hear about them in Matei’s own words starting at 26:34.
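To make the idea concrete, here is a minimal sketch of how a table version can be reconstructed by replaying a log of file-level actions. The log format, the `add`/`remove` keys, and the file names are simplified assumptions for illustration; the real Delta Lake protocol records more action types and fields.

```python
import json

# Hypothetical, simplified commit log: one list of JSON actions per table version.
LOG = [
    ['{"add": "part-0001.parquet"}', '{"add": "part-0002.parquet"}'],     # version 0
    ['{"remove": "part-0001.parquet"}', '{"add": "part-0003.parquet"}'],  # version 1
    ['{"add": "part-0004.parquet"}'],                                     # version 2
]


def files_at_version(log, version):
    """Replay commits 0..version and return the set of live data files."""
    if not 0 <= version < len(log):
        raise ValueError(f"unknown table version: {version}")
    live = set()
    for commit in log[: version + 1]:
        for line in commit:
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"])
            elif "remove" in action:
                live.discard(action["remove"])
    return live


# "Time travel" is just a replay that stops at an older version.
old_snapshot = files_at_version(LOG, 0)
latest_snapshot = files_at_version(LOG, 2)
```

Because old data files are never modified in place in this sketch, reading an older version only requires stopping the replay earlier, which hints at why time travel falls out of a log-based design.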

Lakehouse engine designs

The four optimizations that help enable lakehouse performance are:

Auxiliary data structures like statistics and indexes

Data layout optimization within files

Caching hot data in a fast format

Execution optimizations such as vectorization

The first two tricks help minimize I/O for cold data, and the latter two help match data warehouse performance on hot data.
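As a rough illustration of the first trick, the sketch below uses per-file min/max statistics to decide which files a range query has to read. The `FileStats` class, the file names, and the value ranges are made up for this example and are not taken from the talk.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    """Hypothetical per-file statistics for one column."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(stats, lo, hi):
    """Return paths whose [min, max] range overlaps the query range [lo, hi]."""
    return [s.path for s in stats if s.max_value >= lo and s.min_value <= hi]


stats = [
    FileStats("part-0001.parquet", 0, 99),
    FileStats("part-0002.parquet", 100, 199),
    FileStats("part-0003.parquet", 200, 299),
]

# A query for values between 150 and 220 can skip the first file entirely.
selected = files_to_scan(stats, 150, 220)
```

The statistics are tiny compared to the data files, so checking them first is cheap relative to the I/O it can avoid.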

Data layout deserves particular mention. Delta Lake 2.0 recently open-sourced “Z-ordering”, a data sorting technique based on a space-filling curve (e.g. the Z-order curve) that optimizes data locality across multiple columns. Think of it as a generalization of ordinary linear sorting. Another excellent post from Databricks explains how Z-ordering helps queries skip unnecessary data reads.
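The following toy sketch shows the intuition, assuming two small integer columns and a bit-interleaving (Morton code) sort key. It is not Delta Lake's implementation; the helper names and the 4x4 grid of rows are invented for illustration. Rows are split into files of four, and a file can be skipped whenever the filter value falls outside its min/max range for that column.

```python
def z_value(x, y, bits=8):
    """Interleave the low `bits` bits of x and y into a single Morton code."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)
        z |= ((y >> i) & 1) << (2 * i + 1)
    return z


def split_into_files(rows, rows_per_file):
    """Chunk already-sorted rows into fixed-size 'files'."""
    return [rows[i:i + rows_per_file] for i in range(0, len(rows), rows_per_file)]


def files_matching(files, column, value):
    """Count files whose min/max range on `column` could contain `value`."""
    return sum(
        1 for f in files
        if min(r[column] for r in f) <= value <= max(r[column] for r in f)
    )


rows = [(x, y) for x in range(4) for y in range(4)]

# Linear sort by (x, y) versus sort by the interleaved Z-value.
linear_files = split_into_files(sorted(rows), 4)
z_files = split_into_files(sorted(rows, key=lambda r: z_value(*r)), 4)

linear_reads = (files_matching(linear_files, 0, 3), files_matching(linear_files, 1, 3))
z_reads = (files_matching(z_files, 0, 3), files_matching(z_files, 1, 3))
```

In this toy setup the linear sort reads one file for a filter on `x` but all four for a filter on `y`, while the Z-ordered layout reads two files for either filter. That trade, slightly worse on the leading column in exchange for much better on the others, is the sense in which Z-ordering generalizes linear sorting.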

Declarative I/O interfaces

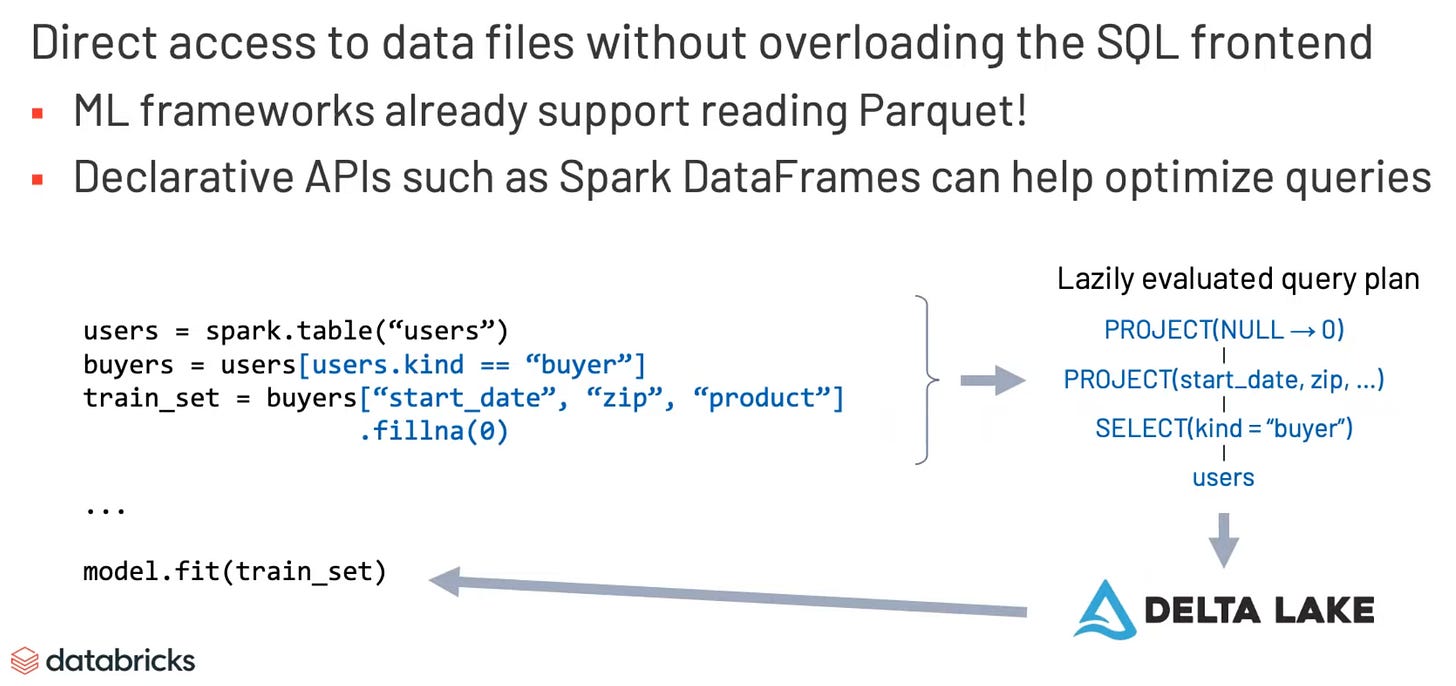

ML workloads often need to process large amounts of data with non-SQL code (e.g. TensorFlow, XGBoost). However, SQL over JDBC/ODBC is painfully slow at this scale. Neither exporting data to a data lake nor maintaining separate copies of the data in both a data warehouse and a data lake is really feasible, due to the added complexity.

A lakehouse’s directly accessible open file formats allow ML workloads to access and process the data files without overloading the SQL frontend. In addition, declarative APIs such as Spark DataFrames can help optimize queries, as illustrated below.
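To see why a declarative API leaves room for optimization, consider the toy sketch below. It is not Spark's API: `LazyFrame` and its methods are hypothetical names for a pure-Python stand-in that records operations instead of running them, so the whole plan can be inspected before any data is touched.

```python
class LazyFrame:
    """Toy declarative frame: records operations, runs them only on collect()."""

    def __init__(self, columns, filters=(), projection=None):
        self.columns = columns          # dict: column name -> list of values
        self.filters = tuple(filters)   # (column, predicate) pairs
        self.projection = projection    # column names to return, or None for all

    def filter(self, column, predicate):
        return LazyFrame(self.columns, self.filters + ((column, predicate),), self.projection)

    def select(self, *names):
        return LazyFrame(self.columns, self.filters, names)

    def columns_needed(self):
        """Columns the plan actually touches; the rest never need to be read."""
        wanted = set(self.projection or self.columns)
        return wanted | {column for column, _ in self.filters}

    def collect(self):
        """Execute the recorded plan: apply filters, then project."""
        n = len(next(iter(self.columns.values())))
        keep = [
            i for i in range(n)
            if all(pred(self.columns[col][i]) for col, pred in self.filters)
        ]
        names = self.projection or list(self.columns)
        return {name: [self.columns[name][i] for i in keep] for name in names}


table = {"user": ["a", "b", "c"], "clicks": [3, 12, 7], "payload": ["x", "y", "z"]}
plan = LazyFrame(table).filter("clicks", lambda v: v > 5).select("user")

needed = plan.columns_needed()   # the "payload" column is never required
result = plan.collect()
```

Because the plan is known up front, an engine can work out that `payload` is never needed and avoid reading it from a columnar file format, which imperative row-by-row code would not reveal.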

Summary

Below is a great summary of lakehouse systems in Matei’s own words.

In the later part of the keynote, Matei also touched upon “challenges in cloud platforms” and “ongoing projects”. Both are well worth listening to but are omitted here to keep this post short.

Overall, I highly recommend watching Matei’s original keynote presentation. I hope you find this post useful. Regardless, please feel free to leave a comment. Any feedback is welcome!

In our next post, we’ll dive into some technical details of the paper “Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores”.